Streaming media refers to the capability of playing media data while the data is still being transferred from the server. The user doesn't need to wait until the full media content has been downloaded to start playing. In media streaming, media content is split into small chunks that serve as the transport unit. Once the user's player has received enough chunks, it starts playing.

From the developer's perspective, media streaming consists of two tasks: transferring data and rendering data. Application developers usually concentrate more on transferring data than on rendering it, because codecs and media renderers are often already available.

On Android, streaming audio is somewhat easier than streaming video, because Android provides a friendlier API for rendering audio data in small chunks. Whatever our transfer mechanism is (RTP, raw UDP, or plain file reading), we need to feed the chunks we receive to the renderer. The AudioTrack.write function lets us do so.
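A minimal sketch of that feeding loop follows, written in plain Java so it can run off-device. PcmSink and ChunkPump are hypothetical names made up for illustration. PcmSink stands in for AudioTrack, whose write(byte[], int, int) method has the same shape. The 4 KB chunk size is an arbitrary assumption.

```java
import java.io.IOException;
import java.io.InputStream;

// Hypothetical stand-in for android.media.AudioTrack. On a device, a stream-mode
// track could be passed as track::write, because AudioTrack.write(byte[], int, int)
// has this same shape.
interface PcmSink {
    int write(byte[] buffer, int offset, int length);
}

class ChunkPump {
    // Assumed chunk size; real code often sizes it from AudioTrack.getMinBufferSize(...).
    static final int CHUNK_SIZE = 4096;

    /**
     * Reads the source chunk by chunk and hands each chunk to the sink as soon
     * as it arrives, so playback can start before the whole source is read.
     * Any InputStream works: a file, a socket, a decoder's output.
     * Returns the total number of bytes delivered to the sink.
     */
    static long pump(InputStream source, PcmSink sink) throws IOException {
        byte[] buffer = new byte[CHUNK_SIZE];
        long total = 0;
        int read;
        while ((read = source.read(buffer, 0, buffer.length)) != -1) {
            int offset = 0;
            // A sink may accept only part of a chunk, so loop until all of it is written.
            while (offset < read) {
                int written = sink.write(buffer, offset, read - offset);
                if (written <= 0) {
                    // A blocking sink should always make progress; AudioTrack reports errors as negative values.
                    throw new IOException("sink returned " + written);
                }
                offset += written;
            }
            total += read;
        }
        return total;
    }
}
```

On a device, this might be called as ChunkPump.pump(stream, track::write) after track.play() on a stream-mode track. The call blocks, so it belongs on a worker thread rather than the UI thread.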

An AudioTrack object runs in one of two modes: static or stream. In static mode, we write the whole audio clip to the track in one go. In stream mode, audio data is written in small chunks. Static mode is more efficient because it avoids the ongoing overhead of copying data from the Java layer to the native layer during playback, but it isn't suitable if the audio file is too big to fit in memory. Note that play is called at a different point in each mode. In static mode, we must call write first and then call play; otherwise, AudioTrack raises an exception complaining that the AudioTrack object isn't properly initialized. In stream mode, the order is usually reversed: call play, then keep writing data as it arrives. Writing a little data before play, to prime the buffer, is also allowed. Under the hood, the mode determines the memory model: in static mode, audio data is passed to the renderer via shared memory, which is where its efficiency comes from.
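To see why static mode stops being practical for long files, it helps to work out how large uncompressed PCM is. The sketch below only does that arithmetic: bytes = sample rate × channels × bytes per sample × seconds. PcmMath is a hypothetical helper. The CD-quality figures used with it (44.1 kHz, stereo, 16-bit) are an example, not something the sample requires.

```java
// Linear PCM size arithmetic: bytes = rate * channels * (bits / 8) * seconds.
class PcmMath {
    /** Bytes of PCM produced per second of audio. */
    static long bytesPerSecond(int sampleRateHz, int channels, int bitsPerSample) {
        return (long) sampleRateHz * channels * (bitsPerSample / 8);
    }

    /** Bytes needed to hold the given number of seconds, e.g. for a static-mode track. */
    static long bytesFor(int sampleRateHz, int channels, int bitsPerSample, int seconds) {
        return bytesPerSecond(sampleRateHz, channels, bitsPerSample) * seconds;
    }

    /** Milliseconds of audio held in a buffer of the given size (rounded down). */
    static long millisIn(long bufferBytes, int sampleRateHz, int channels, int bitsPerSample) {
        return bufferBytes * 1000 / bytesPerSecond(sampleRateHz, channels, bitsPerSample);
    }
}
```

For example, a three-minute stereo 16-bit clip at 44.1 kHz is 176,400 B/s × 180 s = 31,752,000 bytes, roughly 30 MB, and static mode needs all of it in memory at once. A 4 KB stream-mode chunk of the same audio holds only about 23 ms.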

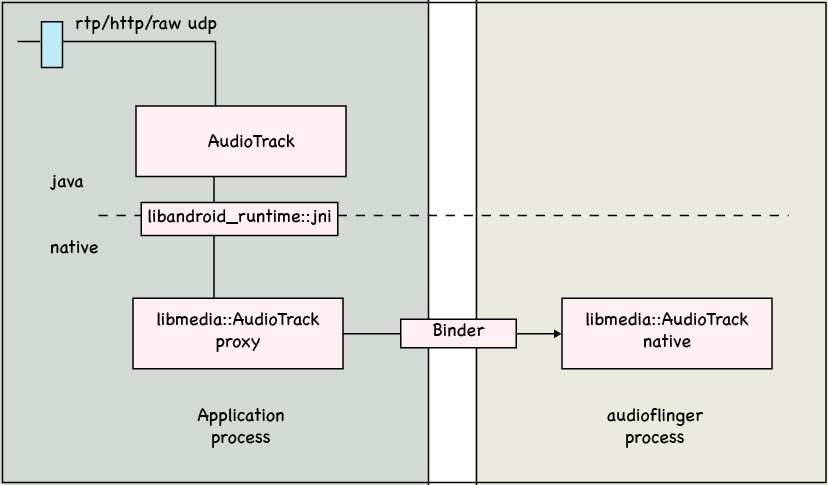

From a bird's-eye view, the architecture of a typical audio streaming application works as follows:

Our application receives data from the network. The data is then passed to a Java-layer AudioTrack object, which internally calls through JNI into a native AudioTrack object. The native AudioTrack object in our application is a proxy that refers, through the Binder IPC mechanism, to the implementation AudioTrack object residing in the audioflinger process. The audioflinger process interacts with the audio hardware.

Because our application and audioflinger run in separate processes, audio data our application has already written to audioflinger may keep playing even after our application exits.

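AudioTrack only accepts raw, uncompressed PCM audio. (G.711 is not the same thing: it is a companded μ-law/A-law encoding that has to be expanded to linear PCM first.) In other words, we can't stream MP3 audio directly. We have to handle decoding ourselves and feed the decoded data to AudioTrack.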
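As a small illustration of decoding before AudioTrack, here is a sketch of the classic G.711 μ-law expansion. It uses the bias-and-segment scheme found in the widely circulated g711.c reference code and turns 8-bit μ-law bytes into 16-bit linear PCM. MuLaw is a hypothetical helper. Whether a given source actually sends μ-law is an assumption; MP3 or AAC would need a real codec instead.

```java
// G.711 mu-law to 16-bit linear PCM expansion (bias/segment method).
class MuLaw {
    private static final int BIAS = 0x84; // 132, the bias added during mu-law encoding

    static short decode(byte muLawByte) {
        int u = ~muLawByte & 0xFF;       // mu-law bytes are stored bit-inverted
        int sign = u & 0x80;             // top bit: sign
        int exponent = (u >> 4) & 0x07;  // 3-bit segment number
        int mantissa = u & 0x0F;         // 4-bit step within the segment
        int magnitude = ((mantissa << 3) + BIAS) << exponent;
        return (short) (sign != 0 ? BIAS - magnitude : magnitude - BIAS);
    }

    /**
     * Decodes a packet into 16-bit PCM bytes in little-endian order
     * (assumed to match the device's native byte order).
     */
    static byte[] decodeToPcm16le(byte[] muLaw) {
        byte[] pcm = new byte[muLaw.length * 2];
        for (int i = 0; i < muLaw.length; i++) {
            short s = decode(muLaw[i]);
            pcm[2 * i] = (byte) s;            // low byte first
            pcm[2 * i + 1] = (byte) (s >> 8); // then high byte
        }
        return pcm;
    }
}
```

The output of decodeToPcm16le is what would be written to an AudioTrack configured for 16-bit PCM at the source's sample rate (8 kHz is typical for G.711).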

Sample

http://code.google.com/p/rxwen-blog-stuff/source/browse/trunk/android/streaming_audio/

For demonstration purposes, this sample uses a very simple transfer mechanism: it reads data from a WAV file on disk in chunks. We can treat this as if the data were being delivered from a media server over the network; the idea is the same.

Update: Check this post for a more concrete example.

Sunday, April 25, 2010

43 comments:

Thanks for the example!

I'm having a problem on my HTC Incredible though. I'm using the streaming mode and all I hear back is static sound.

Do you have any idea why this is?

I'm stumped. I came across your post and thought I was in luck; hopefully I still am.

I'll keep testing until you comment back. I'm under a time crunch (as usual, right?).

Sorry, a bit more information might help: I'm reading a .pcm file from the phone's SD card.

You can try a .wav file, which contains a RIFF header section before the actual sound data.

A .pcm file doesn't include this section, and may not be recognized by AudioTrack.

Okay, thanks. I also read something recently about starting at offset 41 to skip the header... but I tried it both in bytesWritten += track.write(buffer, 41, bytesRead); and bytesRead = audioStream.read(buffer, 41, bufferSize); to no avail. I'll try the .wav. I thought this worked with .pcm; if not, I'll go the .wav way.

If you run into something, can you please post again here? Thanks again for your time and replies. It's appreciated.

I tried the .wav approach: same staticky sound, identical. If I speak loudly next to the mic and play it back, I can hear my voice, but it's barely audible and sounds a bit choppy. I wonder if this is some sort of buffering issue.

Hmmm...

Why do you skip the first 41 bytes?

Based on my understanding, a raw PCM file lacks the header that AudioTrack needs. So instead of skipping the first 41 bytes, you may need to prepare a correct header (41 bytes?) and feed it to AudioTrack before writing your PCM data.

Are you sure your mic is working fine?

What about downloading some known-to-be-good WAV files from the web and trying to play them?

Ya, my mic seems fine. I was able to offload the .pcm and play it in Audacity by importing it.

AudioTrack supports pcm, http://developer.android.com/reference/android/media/AudioTrack.html.

So, I'm stuck here scratching my head. I've got a burning sensation that I'm close to having this work... all of the latency issues are gone, and the file now plays regardless of its length. I just need to get it to play "clean".

Well, I'm back to looking at this again today so I'll see what I come up with.

Thanks for your responses, again.

Hi,

Is it possible to play audio directly from a network stream, without saving to a file first?

I need playback to happen in real time (for a VoIP-type app), so I don't really want to buffer to a file.

I'm capturing audio with AudioRecord and then sending it over RTP (jlibrtp). I receive the data stream, but I can't figure out how to play it.

Any help in this regard would be appreciated.

Thanks!

Werner,

Yes, it's very possible. As you can see on line 80 of my sample code, the source audioStream is an instance of FileInputStream. You might consider replacing it with a BufferedInputStream object that reads RTP packets coming from the network.

Hope it helps.

Thanks for your help.

I'm having trouble implementing it. I'm guessing I first need to create an InputStream to create the BufferedInputStream with, but I can't figure out how to set the source of the InputStream to the rtp stream that I'm receiving.

At the moment I'm trying to use stuff like URLConnection.getInputStream, or Socket.getInputStream, but it seems neither are available/applicable for UDP.

How do I set the source of the BufferedInputStream to my incoming RTP stream?

Thanks again.

Actually, it's not necessary to use an inputstream. :)

On line 88, I read some data from the stream into a buffer, which is a byte array. You can read data from a UDP socket there instead, store it in the buffer, and feed it to the track for playback.
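A minimal sketch of that suggestion, using plain java.net.DatagramSocket: each datagram's payload is copied out and handed to a sink, which on a device would call AudioTrack.write. UdpAudioReceiver and its sink are hypothetical, and the code assumes each datagram carries raw PCM. With RTP, the 12-byte fixed header (plus any CSRC entries or header extension) would have to be stripped first, and compressed payloads would need decoding.

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.SocketTimeoutException;
import java.util.Arrays;
import java.util.function.Consumer;

class UdpAudioReceiver {
    // Assumed upper bound on datagram size; larger datagrams are truncated by receive().
    static final int MAX_PACKET = 2048;

    /**
     * Receives up to maxPackets datagrams and passes each payload to the sink.
     * Stops early if the socket's receive timeout expires. Returns the packet count.
     */
    static int receive(DatagramSocket socket, int maxPackets, Consumer<byte[]> sink) throws IOException {
        byte[] buffer = new byte[MAX_PACKET];
        DatagramPacket packet = new DatagramPacket(buffer, buffer.length);
        int delivered = 0;
        while (delivered < maxPackets) {
            packet.setLength(buffer.length); // receive() shrinks the length, so reset it each time
            try {
                socket.receive(packet);
            } catch (SocketTimeoutException e) {
                break; // nothing arrived within the socket's SO_TIMEOUT
            }
            // Copy just the received bytes, because the buffer is reused for the next packet.
            sink.accept(Arrays.copyOfRange(packet.getData(), packet.getOffset(),
                    packet.getOffset() + packet.getLength()));
            delivered++;
        }
        return delivered;
    }
}
```

Because UDP preserves datagram boundaries, one receive yields exactly one sender packet. Lost or reordered packets are not handled here.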

Sorry if my previous suggestion led you down the wrong path.

Hi,

I am trying to get the audio stream from an IP camera and play it on an Android phone.

For this I am using http:///axis-cgi/audio/receive.cgi.

Can you please help me solve this?

Thanks & Regards,

Karumanchi

Hi Karumanchi,

I think your objective can be broken down into several subtasks:

1. Receive sound packets from the IP camera and save them in a local buffer

2. Decode the sound packets to raw PCM data

3. Feed the data to AudioTrack
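One way to wire those three steps together is a bounded queue between a network thread (step 1) and a playback thread (steps 2 and 3), so neither side has to wait on the other for every packet. The sketch uses java.util.concurrent.ArrayBlockingQueue. AudioPipeline, the queue depth, the end-of-stream sentinel and the decoder hook are all illustrative choices, not part of the original sample.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.function.Consumer;
import java.util.function.UnaryOperator;

// Bounded hand-off between a network thread and a playback thread.
class AudioPipeline {
    // End-of-stream sentinel, compared by identity so a real empty packet is not mistaken for it.
    private static final byte[] END = new byte[0];

    private final BlockingQueue<byte[]> queue;

    AudioPipeline(int depth) {
        this.queue = new ArrayBlockingQueue<>(depth);
    }

    /** Step 1 (network thread): blocks while the queue is full, pacing the receiver. */
    void putPacket(byte[] packet) throws InterruptedException {
        queue.put(packet);
    }

    /** Network thread: signals that no more packets will arrive. */
    void finish() throws InterruptedException {
        queue.put(END);
    }

    /** Steps 2 and 3 (playback thread): decode each packet and feed it to the sink. */
    int drain(UnaryOperator<byte[]> decoder, Consumer<byte[]> sink) throws InterruptedException {
        int played = 0;
        while (true) {
            byte[] packet = queue.take(); // waits for the next packet
            if (packet == END) {
                return played;
            }
            sink.accept(decoder.apply(packet));
            played++;
        }
    }
}
```

The decoder hook is where a G.711 or other decoder would go, and the sink would call AudioTrack.write. A depth of a few packets trades a little latency for tolerance of network jitter.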

BTW, what is the url you gave in the comment?

Thanks for your reply.

To receive audio from an IP camera, we can use an HTTP request, e.g. 'http://10.13.16.177/axis-cgi/audio/receive.cgi'.

Now I need to play it on the Android phone. Can't this problem be solved without buffers, or with a player?

If I remember correctly, MediaPlayer class can play http based audio content. It uses decoders internally, so you don't have to pass pcm data to it.

What's the reason for not using the MediaPlayer or AudioTrack class? MediaPlayer provides a convenient wrapper around the native decoders, and AudioTrack plays PCM data you decode yourself, so either should be a good candidate for your task.

Thanks for your reply!

Actually, my task is to make the IP camera and the Android phone communicate by voice chat over a LAN, using a TCP connection.

Since I can't make a full-duplex connection, I am trying to design half-duplex communication first, so that I can hear the IP camera's audio on the Android phone and vice versa.

Please help me if you have any suggestions.

thanks & regards,

karumanchi

Sorry, I don't quite understand what the difficulty with your task is.

Basically, it doesn't matter whether you transfer voice data over TCP or UDP. My sample just assumes that the voice data can be transferred to your phone.

So, are you having a problem setting up a TCP connection between the IP camera and the Android phone?

thanks!

Yes, I have a problem making a TCP connection between the IP camera and Android.

Can you help me figure out how to proceed with this task?

thanks

karumanchi

Here is an Android TCP connection sample:

http://www.helloandroid.com/tutorials/simple-connection-example-part-ii-tcp-communication

It includes both client & server sides.

You can use it as long as you know the IP address and port the IP camera is listening on.
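Once the socket is connected, keep in mind that TCP is a byte stream with no message boundaries. A single read may return half a frame or several frames at once. If the playback side wants fixed-size frames, DataInputStream.readFully is a simple way to get them. In this sketch, TcpFrameReader is a hypothetical name, and the 320-byte frame (20 ms of 8 kHz mono 16-bit PCM) and 5-second connect timeout are placeholder assumptions. The camera's real protocol may add its own framing.

```java
import java.io.DataInputStream;
import java.io.EOFException;
import java.io.IOException;
import java.io.InputStream;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.function.Consumer;

class TcpFrameReader {
    /** Reads fixed-size frames until the stream ends; returns the number of whole frames. */
    static int readFrames(InputStream in, int frameSize, Consumer<byte[]> sink) throws IOException {
        DataInputStream data = new DataInputStream(in);
        int frames = 0;
        while (true) {
            byte[] frame = new byte[frameSize];
            try {
                data.readFully(frame); // blocks until the whole frame has arrived
            } catch (EOFException e) {
                return frames; // connection closed; a trailing partial frame is dropped
            }
            sink.accept(frame);
            frames++;
        }
    }

    /** Connects to a (placeholder) host and port and streams 320-byte frames to the sink. */
    static int stream(String host, int port, Consumer<byte[]> sink) throws IOException {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), 5000); // 5 s connect timeout
            return readFrames(socket.getInputStream(), 320, sink);
        }
    }
}
```

On Android, this has to run off the main thread, and the app needs the INTERNET permission.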

Hi,

I am doing a project in which I would like to receive live streams from an IP camera.

I am trying to use the default Android media player to play both audio and video, but I am unable to get it working. I need to use the RTSP protocol for this application.

Please help me do so.

kind regards,

karumanchi

The media player supports the RTSP protocol. It should be able to receive the SDP from the RTSP server, which is the IP camera in your case. But the OpenCORE framework doesn't support streaming many media types, MP3 for example. You may try streaming WAV format to see if it works.

Thanks for your reply.

rtsp://my-server/axis-media/media.amp is the default address for streaming video and audio from the IP camera. I don't know exactly what format this is.

Can you elaborate if you have any ideas for solving my problem?

Actually, I tried writing a streaming media player to stream live audio, e.g. playing MP3, and it works perfectly. But in this scenario I have no idea how to proceed.

Please give me some suggestions if you can.

thanks

karumanchi

You can use tcpdump to capture the SDP carried in the RTSP exchange; it should describe the media types of the audio and video.
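The lines to look for in that SDP are the m= media descriptions and their a=rtpmap attributes. Together they map each RTP payload type to an encoding name and clock rate (RFC 4566). Static payload types such as 0 (PCMU, i.e. G.711 μ-law) may appear without an rtpmap line at all. Below is a rough sketch of a scanner for both. SdpRtpMap is a hypothetical helper, not a full SDP parser.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Rough SDP (RFC 4566) scan for media sections and RTP payload mappings.
class SdpRtpMap {
    /** Media types from "m=" lines, e.g. "audio" or "video", in order of appearance. */
    static List<String> mediaTypes(String sdp) {
        List<String> types = new ArrayList<>();
        for (String line : sdp.split("\r?\n")) {
            line = line.trim();
            if (line.startsWith("m=")) {
                // Format: m=<media> <port> <proto> <fmt> ...
                types.add(line.substring(2).split(" ")[0]);
            }
        }
        return types;
    }

    /** Payload type -> "encoding/clock rate[/channels]" from "a=rtpmap:" lines. */
    static Map<Integer, String> rtpMaps(String sdp) {
        Map<Integer, String> maps = new LinkedHashMap<>();
        for (String line : sdp.split("\r?\n")) {
            line = line.trim();
            if (!line.startsWith("a=rtpmap:")) {
                continue;
            }
            String rest = line.substring("a=rtpmap:".length()); // e.g. "0 PCMU/8000"
            int space = rest.indexOf(' ');
            if (space <= 0) {
                continue; // malformed line
            }
            try {
                maps.put(Integer.parseInt(rest.substring(0, space)), rest.substring(space + 1).trim());
            } catch (NumberFormatException e) {
                // skip a malformed payload type
            }
        }
        return maps;
    }
}
```

If the audio encoding listed there is not one the platform's media framework can stream, that would explain MediaPlayer refusing the URL.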

You can also use logcat to view the OpenCORE media framework's log; it may hint at the step at which it refuses to play the media.

Hi, I want to do video streaming through a P2P middleware, so I can't use HTTP/RTSP links. I just have file chunks. Any idea how to play them?

As far as I know, the Java-layer API (MediaPlayer) doesn't let you feed media chunks to it directly.

Maybe you can create your own media streaming server that receives data through P2P and reassembles the file chunks into a valid HTTP/RTP stream for MediaPlayer.

Of course, there will be a performance penalty, since the data is transferred more than once.

Thanks, I'll try that :-)

I don't have much knowledge of the HTTP/RTSP protocols. If you could point me to some tutorials on reassembling chunks for those protocols, it'd be very much appreciated.

Good example. Given my lack of development experience with Android, where do you place the 1.wav file in the workspace so that it is available to the app? Do you have to copy it to the emulator somehow? I tried copying it to C:\Program Files\Android\android-sdk-windows\tools, where emulator.exe is on my Windows machine, but it didn't help. fileSize always reads 0 for me.

Thanks.

I copied the WAV file to the emulator with the adb push command.

Hi sir,

I used your code to play audio, and it's working fine. Now I need to play audio data streamed over RTP. Can you suggest how to store the chunks streamed through RTP in a buffer?

Thanks in advance.

vignesh,

Please check out this post for a sample that shows how to send data over the network.

I'm new to Android apps. Is there any sample code to play a short audio clip when the user clicks on text or a button? Basically, there are hundreds of short audio phrases associated with clickable text or buttons, and I want the user to be able to click on one and hear it.

I'm thinking about static AudioStream but not so sure.

Thanks.

Hi,

I want to split my audio files into 1 MB pieces. For example, if a file is larger than 5 MB or 3 MB, I want to split it into 1 MB parts. Please guide me on how to do that.

Hi priya. Can you elaborate more? I don't get your requirement.

Can you please help me? I need to stream audio while it is being recorded.

What code do I have to write to buffer the audio and send it at the same time?

Wow, it's a great post.

I have a multicast URL (UDP, RTMP, MMS...), like IPTV.

Can I stream it on Android?

Please help.

Thanks for the example!

Actually, I want to create an app that reads and plays audio simultaneously (the audio and written data should be stored once downloaded).

Can you help me build such software?

Hi,

I want to stream MP3 songs between Android devices.

Can someone help me out with it?

Hi. I want to send an audio or voice stream from one Android device to multiple Android devices. Can anyone help me with that?
